As large language models (LLMs) integrate into the core of modern search engines, they are fundamentally changing how search results are processed, filtered, and presented.

The shift is subtle but significant: ranking #1 is no longer the only goal. Being in the top relevant set that an LLM can reason over is now just as critical.

This article outlines how LLM-augmented search systems work and how content creators can adapt to stay visible and relevant in the new paradigm.

What Do We Mean by a 'Top Set'?

In traditional search, content creators aimed to rank #1, or at least to appear on the first page. LLM-powered search doesn't work the same way.

Instead of relying solely on ordered lists, LLMs evaluate a pool of documents (the "retrieved set") and then reason across the most relevant ones to generate an answer.

This means the goal isn't winning rank #1; it's making it into the top set of high-signal sources that the LLM selects and reads from.

Being part of this top set is what determines whether your brand gets cited, summarized, or even mentioned at all in AI-generated answers.

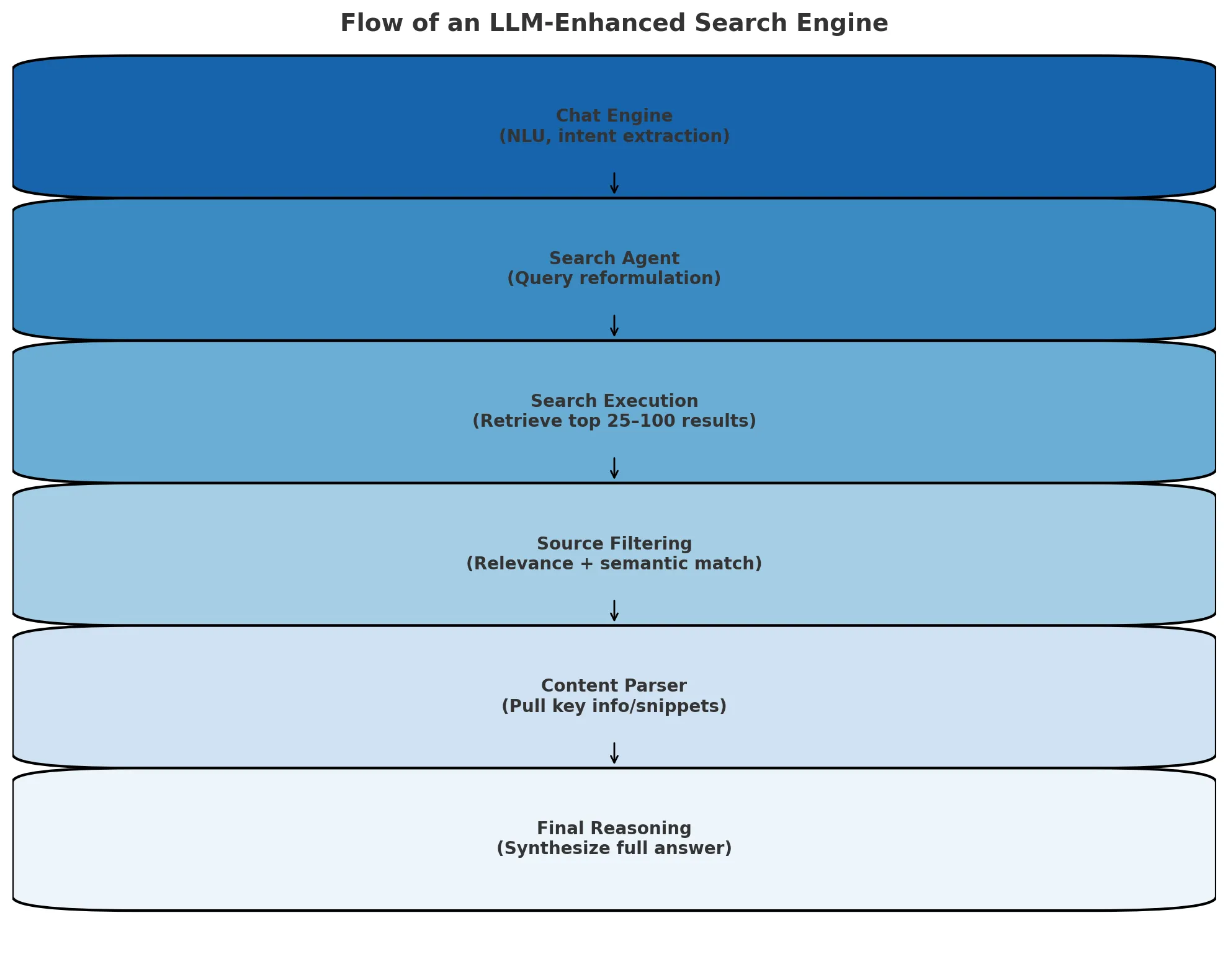

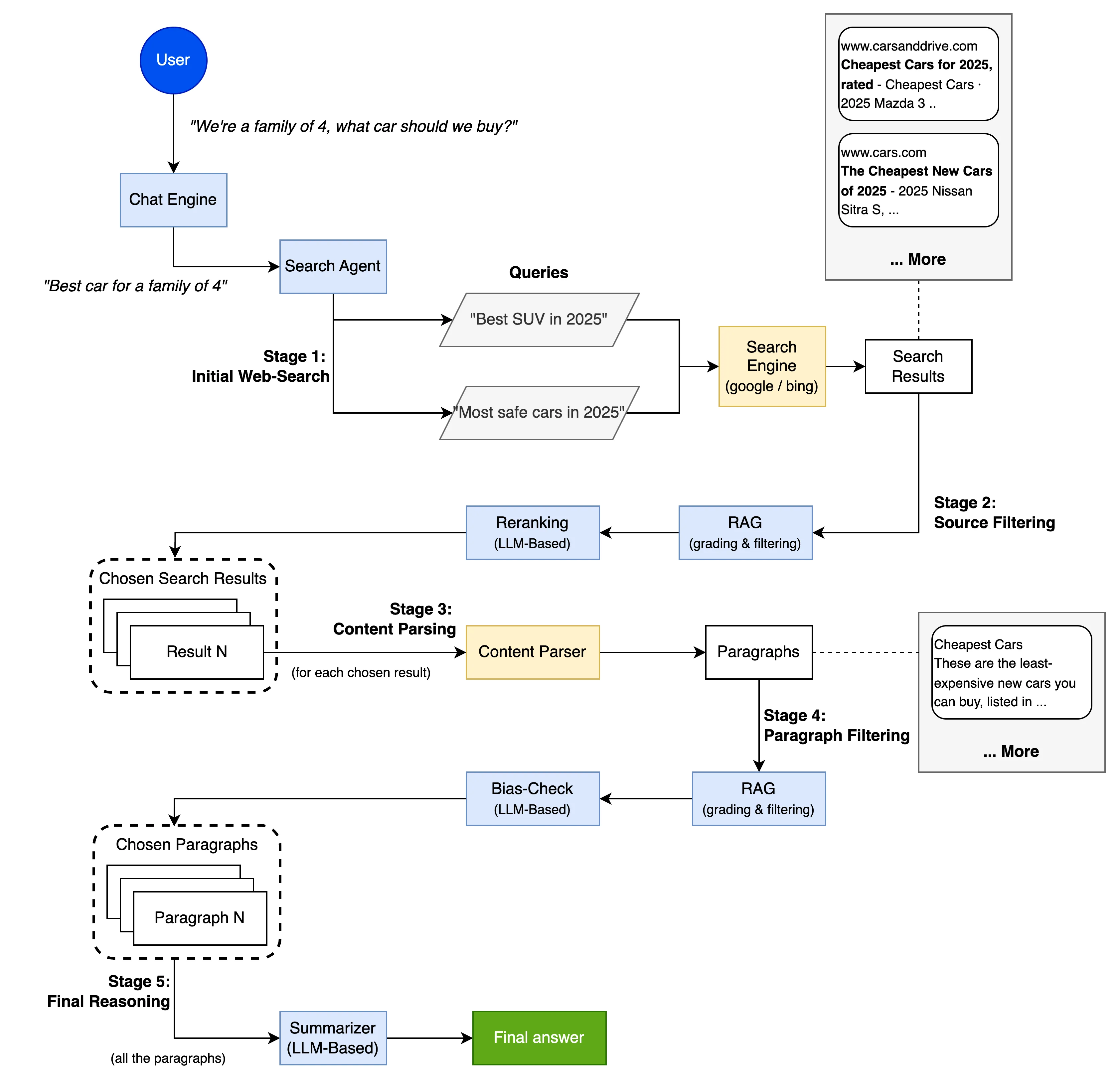

The Flow of an LLM-Enhanced Search Engine

1. Chat Engine

The LLM receives the user’s natural language prompt and extracts intent, context, and key data points.

Example: “What’s the safest car for a family of four?” might yield tags such as “family car”, “safety”, and “four passengers”.

1.1 What Happens Here

The model parses the prompt using advanced natural language understanding (NLU) to detect user goals and reframe the request into structured, search-ready queries.
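In a real system the LLM itself performs this extraction. As a rough illustration of the input and output shapes only, here is a rule-based stand-in that maps the example prompt to the tags listed above. The `INTENT_LEXICON` table and `extract_intent_tags` function are hypothetical and hard-coded for this one example; they are not how any particular engine works.

```python
import re

# Hypothetical lexicon: regex pattern -> normalized intent tag.
# A real LLM infers these tags from context instead of using a fixed table.
INTENT_LEXICON = {
    r"\bfamil(y|ies)\b": "family car",
    r"\bsafe(st|ty)?\b": "safety",
    r"\b(four|4)\b": "four passengers",
}


def extract_intent_tags(prompt: str) -> list[str]:
    """Return every tag whose pattern appears in the prompt, in lexicon order."""
    text = prompt.lower()
    return [tag for pattern, tag in INTENT_LEXICON.items() if re.search(pattern, text)]


print(extract_intent_tags("What's the safest car for a family of four?"))
```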

2. Search Agent

The prompt is reformulated into multiple traditional search queries, covering different facets of the user’s intent.

Example: “Best cars for families” and “Top-rated safe vehicles 2025.”

2.1 What Happens Here

LLMs generate these search variants from their understanding of the request's meaning, so the combined queries cover more of the user's intent than a single query would.
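A minimal sketch of this query fan-out, assuming the intent has already been reduced to a main subject plus a few facets. Fixed templates stand in for the LLM's own rewording; the function name `fan_out_queries` and the templates are hypothetical.

```python
def fan_out_queries(subject: str, facets: list[str], year: int) -> list[str]:
    """Expand one intent into several search queries (template-based stand-in)."""
    queries = [f"best {subject}"]                          # broad query
    queries += [f"{subject} {facet}" for facet in facets]  # one query per facet
    queries.append(f"top-rated {subject} {year}")          # freshness-oriented query
    # Drop exact duplicates while keeping the original order.
    return list(dict.fromkeys(queries))


print(fan_out_queries("family car", ["safety ratings", "for four passengers"], 2025))
```

Each variant targets a different facet, so a page that matches only one facet (for example, safety ratings) can still surface in the combined results.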

3. Search Execution

Queries are passed to a conventional search engine (such as Bing or Google), which returns a broad list of potential sources.

3.1 What Happens Here

Standard ranking algorithms process the queries and return a result set, typically the top 25–100 web results, each with a title, snippet, and metadata.
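The results coming back from each query can be pictured as simple records. The sketch below assumes, as an illustration rather than documented behavior of any engine, that the per-query lists are merged round-robin (rank 1 of every query, then rank 2, and so on), deduplicated by URL, and capped at a limit such as 100. `SearchResult` and `merge_results` are hypothetical names.

```python
from dataclasses import dataclass, field
from itertools import zip_longest


@dataclass
class SearchResult:
    """One web result as returned by the underlying search engine (hypothetical shape)."""
    url: str
    title: str
    snippet: str
    metadata: dict = field(default_factory=dict)


def merge_results(result_lists: list[list[SearchResult]], limit: int = 100) -> list[SearchResult]:
    """Interleave per-query result lists round-robin, dropping repeated URLs."""
    seen: set[str] = set()
    merged: list[SearchResult] = []
    # zip_longest yields one "tier" per rank position; shorter lists pad with None.
    for tier in zip_longest(*result_lists):
        for result in tier:
            if result is not None and result.url not in seen:
                seen.add(result.url)
                merged.append(result)
    return merged[:limit]
```

Round-robin merging keeps any single query variant from crowding out the others; a plain concatenation would let the first query dominate the pool.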

4. Source Filtering

The LLM evaluates the retrieved sources not by rank, but by relevance, clarity, and semantic alignment with the user’s intent.

4.1 What Happens Here

The model reviews:

- Page titles and metadata

- Snippets and visible headings

- Semantic match to the user prompt

The goal is to reduce noise and identify a refined pool of high-signal sources, typically 3–20.
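To make the filtering idea concrete, here is a sketch that scores each result's title and snippet against the prompt and keeps the best matches regardless of their original rank. Production systems would rely on the LLM or learned embeddings for semantic matching; bag-of-words cosine similarity is swapped in here only because it fits in a few lines. `filter_sources`, the `max_sources=20` cap (the upper end of the 3–20 range above), and the `min_score=0.1` threshold are all assumptions.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for an embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def filter_sources(prompt: str, results: list[dict], max_sources: int = 20,
                   min_score: float = 0.1) -> list[dict]:
    """Keep the results whose title + snippet best match the prompt."""
    query_vec = bag_of_words(prompt)
    scored = []
    for result in results:
        score = cosine(query_vec, bag_of_words(result["title"] + " " + result["snippet"]))
        if score >= min_score:  # discard clearly off-topic results
            scored.append((score, result))
    # Order by match score only; the engine's original rank plays no part.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [result for _, result in scored[:max_sources]]
```

Because only the title and snippet are scored, a page whose title and opening text state its topic plainly has a better chance of passing this stage.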

5. Content Parsing

From the filtered pages, the LLM pulls specific passages or structured data. It prioritizes factual precision and clarity.
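Passage selection can be sketched the same way: split a page into paragraphs and keep the ones that share the most terms with the prompt. This word-overlap scoring is a simplifying assumption, not a description of how any specific LLM reads pages, and `split_passages` and `best_passages` are hypothetical helpers.

```python
import re


def split_passages(page_text: str) -> list[str]:
    """Split page text on blank lines into paragraph-sized passages."""
    return [p.strip() for p in re.split(r"\n\s*\n", page_text) if p.strip()]


def best_passages(prompt: str, page_text: str, k: int = 2) -> list[str]:
    """Return up to k passages sharing the most distinct words with the prompt."""
    query_terms = set(re.findall(r"[a-z0-9]+", prompt.lower()))

    def overlap(passage: str) -> int:
        return len(query_terms & set(re.findall(r"[a-z0-9]+", passage.lower())))

    ranked = sorted(split_passages(page_text), key=overlap, reverse=True)
    # Passages with no shared terms are never returned.
    return [p for p in ranked[:k] if overlap(p) > 0]
```

Under this scoring, a paragraph that states the key facts together outscores one where they are spread across the page, which hints at why information-dense passages are easier to extract.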

6. Final Reasoning

The model synthesizes an answer from the selected content. This may include summaries, comparisons, and even citations.

6.1 What Happens Here

Using techniques like Retrieval-Augmented Generation (RAG), the LLM blends the retrieved content into a coherent, accurate response.
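In a RAG setup, the selected passages are placed into the model's context alongside the question. A minimal, generic sketch of that assembly step follows, with numbered sources so the answer can cite them. The instruction wording and the `build_grounded_prompt` name are hypothetical, and the model call itself is omitted because it depends on the provider.

```python
def build_grounded_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Assemble a prompt that asks the model to answer only from numbered sources.

    `passages` is a list of (source_url, passage_text) pairs.
    """
    lines = ["Answer the question using only the sources below. Cite sources as [n].", ""]
    for n, (url, text) in enumerate(passages, start=1):
        lines.append(f"[{n}] {url}\n{text}")  # numbered source block
        lines.append("")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


print(build_grounded_prompt(
    "What's the safest car for a family of four?",
    [("https://example.com/a", "Passage text A"), ("https://example.com/b", "Passage text B")],
))
```

Numbering the sources is what lets the final answer point back to specific pages, which is how a cited brand ends up visible in the response.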

How to Make It Through the “First Cut”

Why You May Be Getting Filtered Out

Many brands optimize for rank, not for the criteria that LLMs actually use to select sources. Because the filtering step reviews titles, metadata, and snippets, these elements now form your first impression.

Practical Optimization Tips

- Optimize Your Title Tags: Write in natural language, state the page's topic clearly, and use keywords aligned with real queries.

- Craft Meta Descriptions Carefully: Write for both users and machines: be informative, not just clickbait.

- Elevate Your Snippets: Make your first 1–2 paragraphs information-dense and contextually aligned.

- Use Structured Data Markup: Schema.org markup helps AI systems parse your content as structured knowledge.

- Focus on Semantic Relevance: LLMs look beyond keywords. Use related terms, context cues, and varied phrasing.
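As an example of structured data, here is a sketch that emits a Schema.org `Article` object as JSON-LD, one common way to embed Schema.org markup in a page. `@context`, `@type`, `headline`, `description`, `author`, and `datePublished` are standard Schema.org vocabulary; the helper name `article_json_ld` and the sample values are placeholders.

```python
import json


def article_json_ld(headline: str, description: str, author: str, date_published: str) -> str:
    """Return a Schema.org Article object serialized as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "description": description,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2025-01-15"
    }
    return json.dumps(data, indent=2)


# Placeholder values; the output goes inside a <script> tag in the page's HTML.
snippet = article_json_ld(
    "Top Rank vs. Top Set in LLM Search",
    "How LLM-augmented search selects the sources it cites.",
    "Example Author",
    "2025-01-15",
)
print(f'<script type="application/ld+json">\n{snippet}\n</script>')
```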

Final Thoughts: SEO is Evolving

LLM search doesn’t eliminate traditional SEO, but it reframes what it means to be visible.

Success isn’t about ranking #1; it’s about making it into the LLM’s trusted input set. That’s how your brand becomes part of the answer.

We’re entering an era where “top rank” is being replaced by “top set.” And your content strategy needs to follow.
